Ahead of the (Yield) Curve

Building a high-frequency-trading system in the 1990s.

Introduction

If you’re reading this, you probably know me as a writer on data, investing and startups. Some of you may also know me as the co-founder of a tech startup myself — Quandl, a venture-backed company that was acquired by Nasdaq a few years ago — or perhaps as an angel investor. But I suspect very few know of my previous life, as a quant, trader and portfolio manager at a Japanese hedge fund. So I thought I’d write an essay about one particular adventure from those days. Read on!

“Better Lucky Than Smart”

My first job out of university was as a programmer-analyst at a Japanese hedge fund, Simplex Asset Management. I started work in August 1998. Exactly one month later, Long Term Capital Management, the world's largest and most celebrated hedge fund, blew up — spectacularly.

This was problematic, to say the least.

In the short term, it was actually good news for us. Our fund, newly launched at the time, proposed to trade many of the same quantitative strategies that led to LTCM's demise[1]. With LTCM out of the way, we were often the only capital chasing those opportunities. If we had launched 3 years earlier, we would have blown up alongside LTCM; 3 years later, and those opportunities would have been much diminished. As my boss liked to say, “better lucky than smart”.

But over a longer horizon, we were worried. LTCM’s collapse raised doubts about the fundamental viability of the class of strategies they, and we, traded. Luck wouldn't suffice; we needed a Plan B.

This is the story of that Plan B. I’d like to say it was completely intentional and strategic, but the reality was much more exploratory and emergent. At the end of it, we discovered we had built something for which there wasn’t really a name at that time: a system that tracked market prices, ran models, identified opportunities, designed trades, processed tickets, managed hedges, and exited positions — all in a matter of seconds.

Seconds? Yes, seconds. Laughably slow compared to today’s HFT systems; miles ahead of the market back then. Our proto-HFT system drove most of Simplex's trading activity (and profits) for the best part of a decade. I was one of the lead builders and main traders on the system; here's how it all happened.

PART ONE: SETTING THE STAGE

Why Genius Failed

LTCM and Simplex both specialized in ‘convergence trading’ — building quantitative models of relationships between different securities; placing bets to exploit inconsistencies (‘mispricings’) in those relationships; and profiting when those inconsistencies resolved (‘converged’).

But what happens if the mispricings don't converge? Or even worse, if they diverge? You can double down on your trades, but as Keynes memorably (if apocryphally) said, markets can remain irrational longer than you can remain solvent.

This was what happened to LTCM. In the summer of 1998, their portfolio was buffeted by a perfect storm of adverse market moves, seemingly across every single position they held[2]. This was no coincidence; instead, it reflected the pattern (not captured in LTCM’s historical data) that in a crisis, “all correlations go to one”. Many other investors had very similar positions to LTCM, and were heavily levered to boot; when they stopped out of those positions, it caused all of LTCM’s trades to diverge simultaneously. LTCM didn’t stand a chance[3].

We observed all of this at Simplex, and were determined not to suffer the same fate. But it seemed an inevitable part of convergence trading. The risk profile of this strategy is often described as “picking up nickels in front of a steamroller”: you can be smart, and agile, and nimble, but eventually you’ll get squashed.

We needed something new. Ideally, something that:

didn’t rely on market-to-model convergence

didn’t use excessive leverage

didn’t correlate to other investors’ positions

It seemed impossible. It wasn’t.

The Big Idea: Monetizing Noise

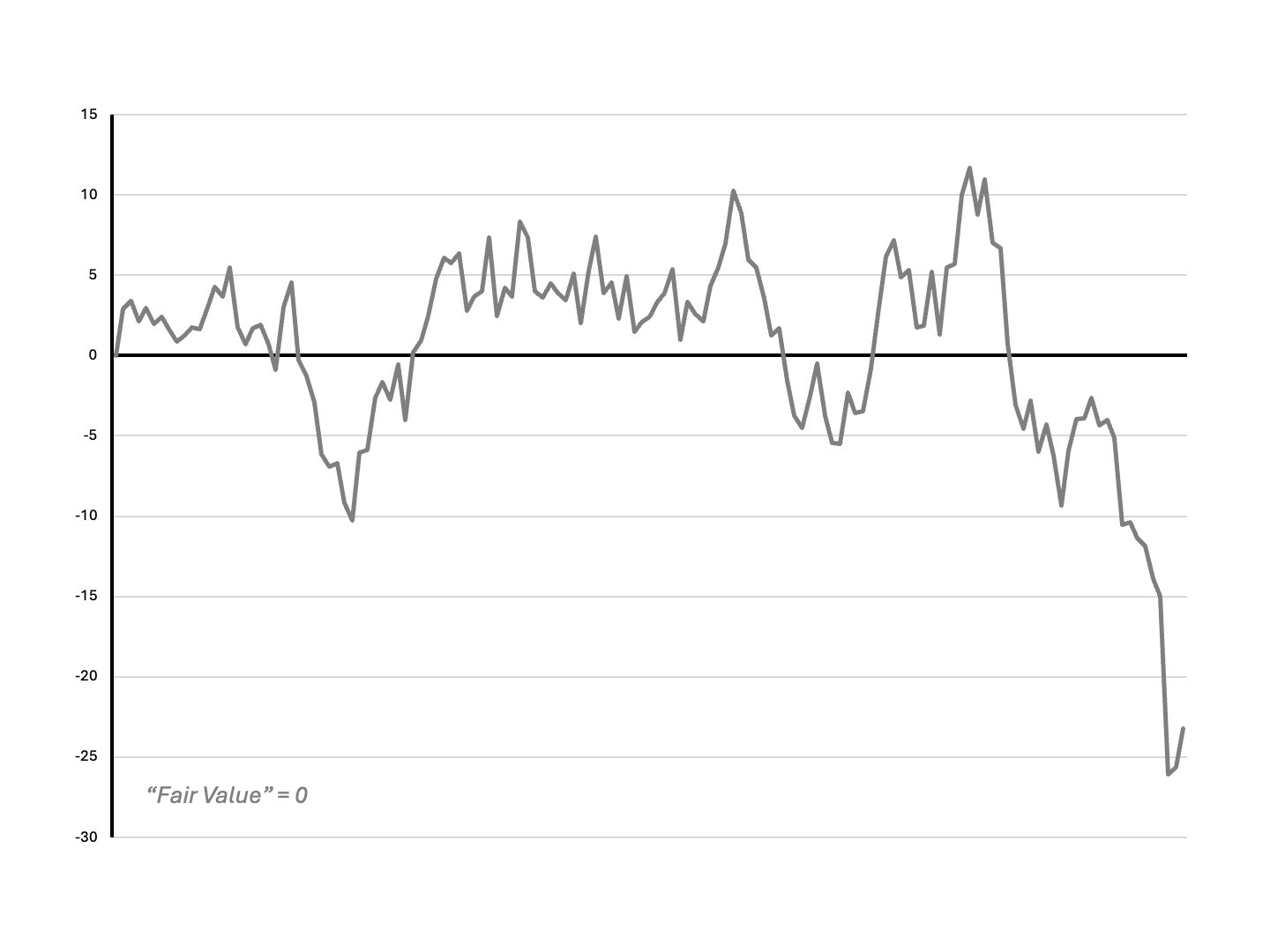

Let’s say you have a model that identifies or predicts a relationship between two or more securities. Deviations from this relationship can then be modelled as a ‘spread’ — the difference between two bond yields, say, or a bond and a bond future. As long as the relationship holds, the spread should ‘mean-revert’, i.e. it should return to zero, like this:

And this means you can build a trading strategy around it. You buy (sell) every time the spread goes below (above) a certain threshold value, and exit when it converges to zero.

This is the classic Salomon-LTCM style of trading: you make money for a while, but eventually the market diverges instead of converging, and you blow up. (Even if it converges again later.)
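As a rough illustration of the threshold rule just described, here is a minimal Python sketch. The function name, the `entry` parameter and the two sample series are invented for this example. It assumes a single unit of position and ignores transaction costs, sizing and doubling down.

```python
def convergence_pnl(spread, entry=2.0):
    """Mark-to-market P&L path of a buy-low / sell-high rule that exits at zero.

    spread: spread observations in basis points (model fair value is 0).
    entry:  hypothetical entry threshold in basis points.
    """
    position = 0      # +1 = long the spread, -1 = short, 0 = flat
    pnl = 0.0
    path = []
    for prev, curr in zip(spread, spread[1:]):
        # Decide the position using only the previous observation.
        if position == 0:
            if prev <= -entry:
                position = 1
            elif prev >= entry:
                position = -1
        elif (position == 1 and prev >= 0) or (position == -1 and prev <= 0):
            position = 0  # spread has converged: go flat
        pnl += position * (curr - prev)
        path.append(pnl)
    return path


# A spread that dips and converges, and one that keeps diverging.
converging = [0, -1, -2, -1, 0, 1, 0]
diverging = [0, -2, -4, -6, -8]
print(convergence_pnl(converging)[-1])  # 2.0
print(convergence_pnl(diverging)[-1])   # -6.0
```

The second series is the steamroller case: the rule buys at -2bp and then sits on a growing mark-to-market loss, because nothing in it reacts until the spread comes back to zero.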

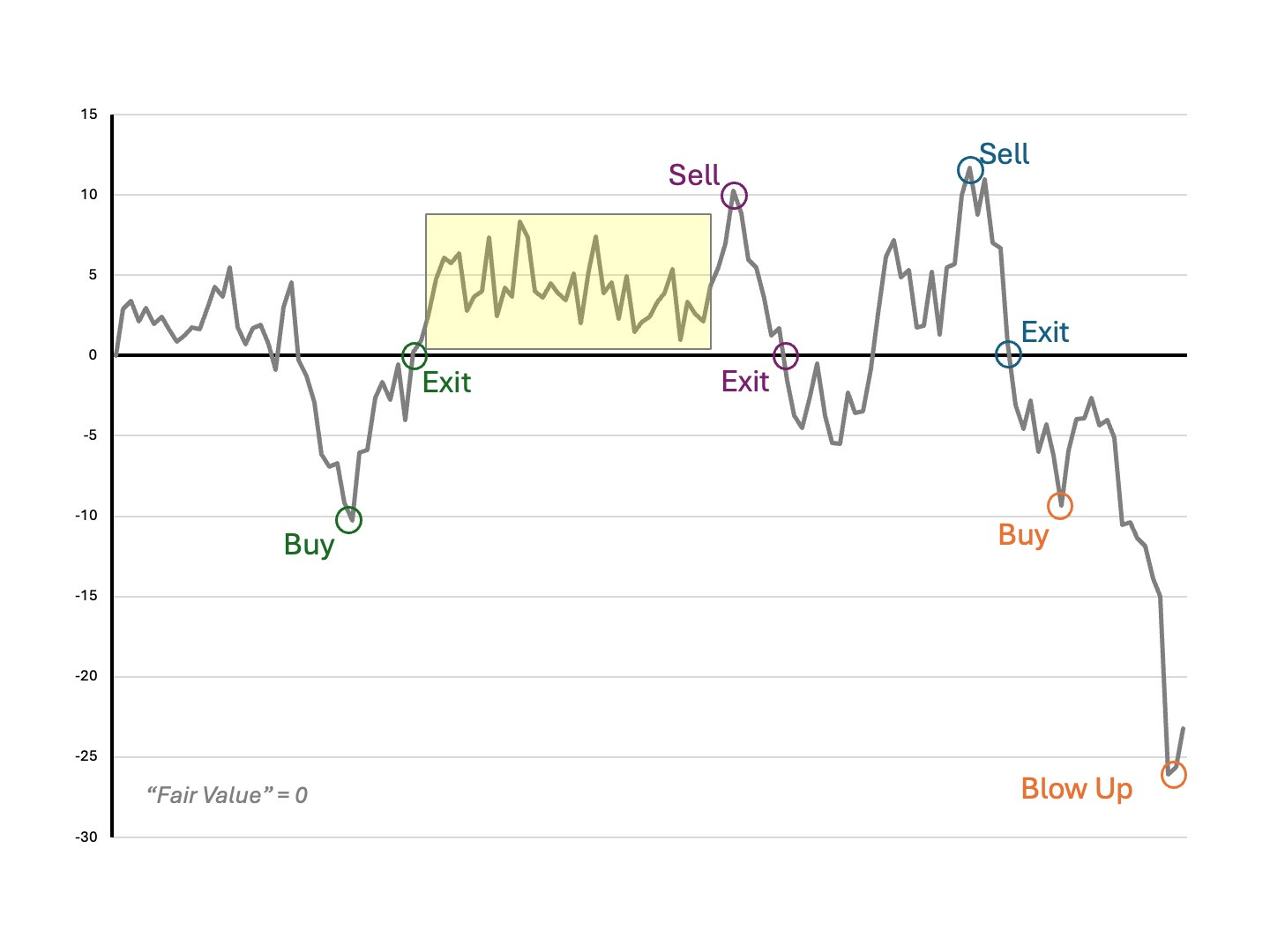

But let’s zoom in on the middle section of this graph, highlighted below:

Notice that although the spread never converges during this window, there are nonetheless a number of peaks and troughs. What if we could trade this noise — sell all the interim peaks, buy all the interim troughs? We’d make money despite the lack of convergence!
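To make the idea concrete, here is a minimal sketch of one way to fade interim peaks and troughs. It is not the signal we actually used: the trailing-average reference, the `window` and `band` parameters and the perfectly periodic sample series are all assumptions chosen for illustration, and costs are ignored.

```python
def noise_pnl(spread, window=3, band=1.0):
    """P&L from fading moves away from a trailing average of the spread.

    The reference level is the mean of the last `window` observations, not
    the model's fair value of zero, so only short-term wiggles matter.
    """
    position = 0
    pnl = 0.0
    for i in range(window, len(spread) - 1):
        reference = sum(spread[i - window:i]) / window
        gap = spread[i] - reference
        if gap >= band:
            position = -1     # local peak: sell the spread
        elif gap <= -band:
            position = 1      # local trough: buy the spread
        else:
            position = 0      # nothing to fade: stay flat
        pnl += position * (spread[i + 1] - spread[i])
    return pnl


# The spread oscillates between 8bp and 12bp and never gets near zero.
stuck = [10, 12, 10, 8, 10, 12, 10, 8, 10, 12, 10]
print(noise_pnl(stuck))  # 8.0
```

Every buy in this toy series happens with the spread well above zero, which is exactly the trade a pure convergence mindset says has negative expected value.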

This might seem blindingly obvious today, but believe me, it wasn’t so at the time. Extremely smart, mathematically skilled, financially sophisticated quant researchers and traders would get hung up on two questions: “How can it possibly make sense to buy the spread when it’s above zero — that trade has negative expected value!” and “How can you possibly make money with a convergence model if the spread never converges?”.

And that was just our colleagues on the desk! Getting our investment committee, risk management, institutional LPs, prime broker and others on board took material time and effort. We had to write multiple research notes, run simulations and back-tests, show actual live trading results, and people still didn’t understand how this could work. Old habits die hard.

The End of Bid-Ask

Of course, saying you’re going to trade the noise is one thing. Doing it effectively is another.

The problem, as always, is bid-ask. The more granular the noise you want to target, the harder it is to overcome transaction costs. If you’re paying 1bp to enter or exit a trade, you need 2bps of opportunity just to break even, and at least 3-4 times that to make it worth the risk. (This is why there’s an entry threshold for your trades.) In the late 1990s, the market was inefficient, but it wasn't that inefficient; the oscillations we were trying to capture were much smaller.
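The arithmetic in that paragraph can be written out as a small sketch. The function names are made up for this example, and the numbers are the ones quoted above.

```python
def min_opportunity_bp(cost_per_side_bp, risk_multiple):
    """Smallest spread move worth trading, in basis points.

    cost_per_side_bp: transaction cost paid on entry and again on exit.
    risk_multiple:    how many times break-even the trader demands.
    """
    break_even = 2 * cost_per_side_bp   # pay once to get in, once to get out
    return break_even * risk_multiple


def net_pnl_bp(move_bp, cost_per_side_bp):
    """Profit left from a captured move after round-trip costs."""
    return move_bp - 2 * cost_per_side_bp


print(min_opportunity_bp(1, 3), min_opportunity_bp(1, 4))  # 6 8
print(net_pnl_bp(1.5, 1))                                  # -0.5
```

At 1bp a side, the entry threshold lands at 6 to 8bp, and a 1.5bp wiggle loses money. The only way to make small oscillations tradable is to push the cost per side towards zero.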

But we found some techniques that worked[4]:

Instead of trading spread constituents directly, trade liquid proxies. What you give up in precise targeting, you make up in round-trip trade count.

Layer strategies on top of each other, and trade only the ‘net change’ across multiple strategies.

Leg into multi-sided trades opportunistically. Randomize this to minimize directional risk.

Track block trades to anticipate short-term hedging flows by market-makers.

React faster than the competition to price discrepancies across venues. (This was still possible back then!)

Trade a lot during volatile markets; enter or exit convergence positions while the rest of the market is still reacting to macro events.

Maximize trade count in both time (frequency) and space (strategies).

Realize that a rough hedge done instantly is superior to a perfect hedge that takes time or costs money.
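The ‘net change’ technique in the list above lends itself to a short sketch: sum the positions every strategy wants, compare with what the book already holds, and send only the difference to market. The strategy and instrument names below are hypothetical, and this is a simplification rather than our production logic.

```python
from collections import defaultdict


def net_orders(current, targets_by_strategy):
    """Orders needed to move the book to the sum of all strategy targets.

    current:             {instrument: position currently held}
    targets_by_strategy: {strategy name: {instrument: desired position}}
    Returns {instrument: quantity to trade}, omitting zero entries.
    """
    combined = defaultdict(float)
    for targets in targets_by_strategy.values():
        for instrument, quantity in targets.items():
            combined[instrument] += quantity

    orders = {}
    for instrument in set(combined) | set(current):
        delta = combined[instrument] - current.get(instrument, 0.0)
        if delta != 0:
            orders[instrument] = delta
    return orders


# Two hypothetical strategies that disagree on the 10-year.
targets = {
    "curve_model": {"UST_10Y": 50.0, "UST_2Y": -50.0},
    "futures_basis": {"UST_10Y": -40.0, "TY_FUT": 40.0},
}
orders = net_orders({"UST_10Y": 10.0}, targets)
print(orders)
```

Here the two 10-year legs net to the 10 units already held, so no 10-year trade goes out at all. Every offsetting leg like that is a bid-ask spread that never gets paid.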

All of these pointed to a single conclusion: we needed rapid, automated execution across a portfolio that traded half a dozen different (but overlapping) convergence signals in a liquid market.

Now we had to build it.

PART TWO: THE DEVIL IN THE DETAILS

There’s Treasure in Treasuries

We decided to start with US Treasury bonds, a market that was efficient enough for quant modeling to work; volatile enough for noise-trading to be feasible; and liquid enough for zero bid-ask to be achievable.

Liquid and efficient it may have been, but technologically, the bond market was still stuck in the 1980s. The vast majority of trades were done on the phone. Electronic venues were fragmented and opaque; electronic execution was less than 10% of the market. Only a handful of participants knew how to price the Treasury yield curve correctly; those that did typically relied on overnight model runs, with Excel spreadsheets for intraday updates. (And this included traders at the world's biggest banks and asset managers.) A perfect market for us!

Data Rules Everything Around Me

The first step was getting the data. The first step is always getting the data[5].

In those days there was no single convenient API we could use to get bond market data[6], so we brute-forced it:

We asked 5 different investment banks (the ones with the biggest Treasury franchises) for their morning, noon and end-of-day pricing runs, which they used to mark their own books.

(We eventually asked each bank to do this for each of their main desks — TKY, LON and NYC — for more coverage).

Sometimes these were in plaintext, sometimes these were images or PDFs or other document formats. OCR tech wasn't great in those days, so we had two separate back offices, in Tokyo and Hong Kong, manually entering prices from these runs.

We did automated intraday screen-grabs of all the major (executable) trading venues.

We did the same for our Bloomberg and Reuters terminals, focusing especially on prices around the market close.

Our parent firm had multiple prime brokerage relationships (for different funds, trading strategies, geographies), and we got prices from them as well.

Of course we then had to error-correct, remove outliers, fix systematic biases and so on[7]. Since prices for most bonds were quoted as a spread to a handful of ‘benchmark’ issues, we also had to normalize all our quotes so that the benchmarks were aligned. And then we had to distill all this raw material into a single ‘golden’ time-stamped price for each bond.
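A minimal sketch of the last step, distilling several sources into one price, might look like the following. The median-plus-tolerance filter is an assumption chosen for simplicity; our actual cleaning was more involved (bias correction, benchmark alignment), and the source names and `max_deviation` value are invented.

```python
from statistics import median


def golden_price(quotes, max_deviation=0.25):
    """Consensus price from several sources, ignoring obvious outliers.

    quotes:        {source name: quoted price}
    max_deviation: hypothetical tolerance, in price points, around the median.
    """
    centre = median(quotes.values())
    kept = [p for p in quotes.values() if abs(p - centre) <= max_deviation]
    if not kept:
        return centre   # sources disagree too much: fall back to the median
    return sum(kept) / len(kept)


quotes = {
    "bank_a": 100.00,
    "bank_b": 100.05,
    "bank_c": 99.95,
    "screen_grab": 100.10,
    "manual_entry": 10.01,   # a keying error from the back office
}
print(round(golden_price(quotes), 3))  # 100.025
```

The median is used as the anchor because a single mis-keyed price barely moves it, whereas it would wreck a plain average.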

We're not done yet! Treasuries, unlike equities, have a fixed maturity. In modelling terms, this means that bonds are not the same from one day to the next. Today you have a 10-year bond; tomorrow it's a 9-year, 364-day bond. For perfect day-to-day model consistency — which will become important in the next section — we needed to construct a universe of ‘virtual’ bonds with constant maturities, that were linear combinations of actual bonds.

This turned out to be non-trivial, since the virtual bonds had to obey a set of conditions that were hard to satisfy simultaneously (well-behavedness, smooth weights, yield and coupon matching, sparse data and asymmetry handling). I spent quite a lot of time figuring out the math on this[8].
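For intuition only, the simplest possible version of a virtual bond is a blend of the two real bonds either side of the target maturity. The sketch below does just that, with made-up function names and data. It deliberately ignores every one of the conditions listed above, which is where the real work was.

```python
def constant_maturity_weights(maturities, target):
    """Weights on real bonds that blend the two nearest issues to `target`.

    maturities: remaining years to maturity of each real bond.
    target:     constant maturity of the virtual bond, in years.
    Returns a list of weights, one per bond, summing to 1.
    """
    order = sorted(range(len(maturities)), key=lambda i: maturities[i])
    weights = [0.0] * len(maturities)
    for lo, hi in zip(order, order[1:]):
        if maturities[lo] <= target <= maturities[hi]:
            span = maturities[hi] - maturities[lo]
            w_hi = (target - maturities[lo]) / span if span else 0.0
            weights[lo] = 1.0 - w_hi
            weights[hi] = w_hi
            return weights
    raise ValueError("target maturity is outside the range of available bonds")


def virtual_yield(yields, weights):
    """Yield of the virtual bond as the weighted sum of real bond yields."""
    return sum(w * y for w, y in zip(weights, yields))


maturities = [9.5, 10.5, 2.0]     # years remaining on three real bonds
yields = [4.0, 4.2, 3.0]          # their yields, in percent
weights = constant_maturity_weights(maturities, 10.0)
print(weights, virtual_yield(yields, weights))
```

As the real bonds age, the weights drift so that the blend stays at exactly 10 years. That drift is what keeps the virtual bond the same instrument from one day to the next.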

Good isn't Fast Enough; but Fast is Good Enough

Our workhorse was a yield curve model we called N3, short for ‘Normal 3-Factor’. N3 was a viciously non-linear set of coupled differential equations that, given 12 input parameters, would spit out the yield at any maturity. 8 of the parameters were constants, denoting unchanging structural aspects of the economy; we'd calibrate these about once a year, running an optimization (the EM algorithm) that took many hours to run, over a decade-plus of historical data. The remaining 4 parameters changed from day to day, reflecting the market's implicit values for 4 numbers that defined current economic conditions: the overnight funding rate, the expected (real) growth rate, the expected inflation rate, and the risk premium[9].

The classic way to use N3 was, we'd pick 4 ‘anchor’ points on the yield curve — interest rates at constant 1-week, 2-year, 10-year and 30-year maturities — and use them to solve for the 4 daily-changing parameters (4 equations, 4 unknowns). The model would then be able to predict yields at every other point; we could then buy (sell) bonds that appeared cheap (rich) relative to their predicted yields. (Taking into account coupon effects, cashflow timing, financing costs, liquidity and other niggly details of course.)

But solving was slow. Not the 6-8 hours that a full EM calibration would take, but tens of seconds up to several minutes. That wasn't good enough for the opportunities we wanted to capture.

We spent a lot of time looking for ways to do this faster, both hardware and software. Nothing worked[10].

And then, a breakthrough!

We realized we didn't need to re-solve N3 for each tick of market data. N3 was impossibly complex, but for small-ish moves, it could be approximated linearly. So we did a full solve every 60 minutes or so; during that full solve, we also calculated all the partial derivatives of each parameter with respect to small moves in each of the anchor bond prices. Then, for any market move in between our 60-minute resets, we’d just do a linear approximation: a simple matrix multiplication sufficed to generate the new parameter values, and another one sufficed to generate the predicted yields elsewhere on the curve[11].
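The trick is easier to see on a toy model. The sketch below is not N3: it uses an invented two-parameter curve (`yield = a * maturity ** b`) with two anchors instead of four, and its `full_solve` is a closed-form stand-in for the slow solver. What it does share with the real thing is the shape of the workflow: solve once, record the sensitivities of each parameter to each anchor, then handle later ticks with a matrix-vector product.

```python
import math

ANCHORS = (2.0, 10.0)   # anchor maturities in years (toy choice)


def model_yield(params, maturity):
    """Toy non-linear curve: yield = a * maturity ** b."""
    a, b = params
    return a * maturity ** b


def full_solve(anchor_yields):
    """Stand-in for the slow solve: recover (a, b) from the two anchor yields."""
    t1, t2 = ANCHORS
    y1, y2 = anchor_yields
    b = math.log(y2 / y1) / math.log(t2 / t1)
    a = y1 / t1 ** b
    return (a, b)


def sensitivities(anchor_yields, bump=1e-4):
    """Finite-difference matrix of d(param_i) / d(anchor_yield_j)."""
    base = full_solve(anchor_yields)
    columns = []
    for j in range(len(anchor_yields)):
        bumped = list(anchor_yields)
        bumped[j] += bump
        solved = full_solve(bumped)
        columns.append([(s - p) / bump for s, p in zip(solved, base)])
    # columns[j][i] holds d(param_i)/d(anchor_j); transpose to one row per parameter.
    return [list(row) for row in zip(*columns)]


def linear_update(base_params, matrix, base_anchors, new_anchors):
    """Approximate the new parameters with one matrix-vector multiplication."""
    moves = [n - o for n, o in zip(new_anchors, base_anchors)]
    return tuple(
        p + sum(m * d for m, d in zip(row, moves))
        for p, row in zip(base_params, matrix)
    )


# Hourly reset: do the expensive work once.
base_anchors = (3.40, 4.70)
base_params = full_solve(base_anchors)
matrix = sensitivities(base_anchors)

# A small intraday tick: compare the fast path with a full re-solve.
tick = (3.42, 4.69)
fast = linear_update(base_params, matrix, base_anchors, tick)
slow = full_solve(tick)
print(model_yield(fast, 5.0), model_yield(slow, 5.0))
```

For a move of a couple of basis points the two predicted 5-year yields agree closely, and the error grows with the size of the move, which is why periodic full resets are still needed. The sketch evaluates the toy curve directly for the final step; the description above uses a second precomputed matrix there instead.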

We applied this conceptual breakthrough throughout the workflow. Every intraday calculation (actual bonds to virtual bonds, price space to parameter space, factor sensitivities, hedge ratios) was converted to a linear approximation. Matrices all the way down!

Structure and Infrastructure

So we had a system that could identify market opportunities fast. The next step was to hook it up to trade execution infrastructure.

There were (are) 3 broad categories of participant in the Treasury market:

Clients: asset managers who wish to buy and sell bonds (including hedge funds like Simplex)

Dealers: the big banks, who facilitate those trades by making markets and holding inventory

Brokers: specialist firms who provide an execution venue for inter-dealer trades, but don’t trade or hold inventory themselves

Back then, client-dealer trades were entirely done by voice. Dealer-dealer trades were sometimes voice, sometimes electronic. Some of those were direct, others went through the brokers. And finally, client-client and client-broker trades didn’t exist: dealers guarded their intermediary position jealously.

We did an end-run around them. We convinced a few brokers to allow us to trade directly on their electronic platforms, giving us access to liquidity at a speed the dealers couldn’t match. A couple of the electronic platforms also had automated ticketing systems, which was great.

Seven Seconds Or Less

But we couldn’t do without the dealers. They still controlled the largest pools of liquidity, and for many bond issues they were the only game in town. And their flow desks transacted with clients exclusively on voice.

We needed a way to execute voice trades faster than anybody else.

This ended up being classic workflow engineering. We figured out the essential actions that went into a voice trade, and automated as much of it as we could:

identify an opportunity that required dealer (not broker) execution

design the trade: legs, directions, bond IDs and notionals

auto-generate a request-for-quote message

paste the message into Bloomberg and send it to a dealer salesperson

receive the quote and parse the prices

pick up the phone and say ‘done’ or ‘nope’

get a trade ticket from the dealer and parse that

feed the trade into our portfolio

Most of these steps were automatable; the only ones that required a human were sending the BBG message and saying yea or nay to the reply. The hardest part was training dealer salespeople to quote prices in a consistent format so that we could parse them automatically. (Oh, what I would have given for an LLM to do that for us.) We ended up building a proto trade capture tool: a widget where you could paste the dealer’s message and the app would extract all the details and flow them through the system. We had one for live quotes, and another for trade confirms, and they worked astonishingly well.
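To give a flavour of what such a paste-and-parse widget does, here is a minimal sketch. The message layout (`<bond> <size>MM <bid> / <ask>`) is entirely hypothetical and is not the format our dealers used. The only real-world convention it relies on is that Treasury prices are quoted in 32nds, with a trailing `+` meaning an extra half of a 32nd.

```python
import re

# Hypothetical quote layout: "<bond> <size>MM <bid> / <ask>"
QUOTE_PATTERN = re.compile(
    r"(?P<bond>\S+)\s+"
    r"(?P<size>\d+(?:\.\d+)?)MM\s+"
    r"(?P<bid>\d+-\d{2}\+?)\s*/\s*"
    r"(?P<ask>\d+-\d{2}\+?)"
)


def price_from_32nds(text):
    """Convert '101-16+' style quotes to decimal (a '+' adds half a 32nd)."""
    handle, ticks = text.rstrip("+").split("-")
    value = int(handle) + int(ticks) / 32
    if text.endswith("+"):
        value += 1 / 64
    return value


def parse_quote(message):
    """Return the quote fields as a dict, or None if the format is not recognised."""
    match = QUOTE_PATTERN.search(message)
    if match is None:
        return None
    return {
        "bond": match["bond"],
        "size_mm": float(match["size"]),
        "bid": price_from_32nds(match["bid"]),
        "ask": price_from_32nds(match["ask"]),
    }


print(parse_quote("UST10Y 50MM 101-16 / 101-17+"))
print(parse_quote("will call you back in 5"))
```

Returning `None` for anything unrecognised matters more than it looks: a free-text reply should fall back to a human, not be guessed at.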

We didn’t quite get it down to seven seconds or less, but we were pretty damn fast.

Happenings Under the Hood

There were lots of smaller hacks that accompanied these broad brush strokes:

Our status monitoring machine had no sound card. I hacked its bootup beep (using clock cycles) so that it could play a tune, if the tune was written down as frequencies. From then on, whenever the system went down, the Imperial March would beep through the office.

Because of database speed and field and size limitations, at one point we encoded the entire swap market into a single ‘binary large object’ (BLOB) which we could load into memory and query. (I cannot tell you how much database technology sucked in those days.)

We needed a UX to display positions, actions, hedges, live P&L and risk. Excel had a stream function that in theory could do this, but it was slow, prone to hang or crash, and unauditable[12]. We found an Excel clone called MarketView which was optimized for quote streaming; it completely sucked at being a spreadsheet, but we didn’t care: we did all the calculations server-side, and used MV as a dumb display.

We initially thought we'd build and trade the system from our head office in Tokyo, but I eventually moved to NJ and set up a satellite trading office there. An early example of co-location!

Sidetracks and Surprises

There were also some misadventures along the way:

That time our super-automated trade reconciliation system emailed all our positions to all our counterparties instead of to our back office. Amazingly, this bug was offset by another bug, introduced by the same code update, that bricked our email server. So we lived to trade another day[13].

That time I messed up the zeros in a trade notional (to be fair, it was a yen trade, there were lots of zeros) and ended up executing a super-complicated multi-legged trade for a minuscule position. I caught the error the next day; for once, I was glad that the market moved against me, because I was able to add the full (intended) size at a much better level[14].

That time the whole trading system had to be paused for weeks because of a devilish bug: a low-level optimization routine kept flipping between two equally valid solutions (it was a ‘slightly’ under-determined system) — we eventually figured out it was due to an unstable interaction between our annealing algorithm, our random number generator, and the way eigenvalues work.

That time — more than once, actually — that our prime broker called us in a panic, because their trading desk told them we had bought (or sold) many times our financing limit. They didn’t know that we had already offset those trades elsewhere — the idea that a fund could round-trip that amount of volume without facing ruinous transaction costs seems to have never occurred to them, and we weren’t about to tell them.

Not quite a misadventure, but an amusing sidenote: Each of our dealer counterparties, seeing the volume of business we were printing with them (but not knowing about the offsets), was convinced that we were financing our positions with their competitors[15]. So they began to offer us better and better deals to finance with them. This too became information we could trade against.

That time we discovered that it didn't matter where we set ‘fair value’ for any convergence spread: as long as the spread was noisy, our P&L was insensitive to its actual level. This obvious-in-retrospect discovery drove our academic research team slightly insane.

How Does It Feel To Win?

Switching to this new HFT approach was a slow process. We probably spent a year thinking about and around the problem, doing research, building prototypes, back-testing, solving various mathematical details etc. Another year building the actual core system. And then another year gradually ramping up our trading volume, figuring out areas to automate, ironing out bugs, getting ready for prime-time.

But it worked. And it worked better than we had any right to expect. In the US market, high-frequency curve trading quickly went from 10% to 50% (and in some months, 80%) of our P&L.

Even better than the P&L was the trading footprint. We discovered a major, major loophole in the system. Portfolio risk, credit limits, correlations: industry practice was to calculate all of these based on end-of-day positions. But our end-of-day positions were typically ‘flat’! The noise we were trading would mean-revert intraday, so all our buys and sells would cancel out. A counterparty looking only at our end-of-day book would conclude that we were taking very little risk, and therefore didn't require us to post much margin[16].
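The gap between what a counterparty saw and what actually happened during the day is easy to show with a toy trade blotter. The function name and the trade sizes below are invented for illustration.

```python
def exposure_profile(trades):
    """Return (gross volume, peak absolute intraday position, end-of-day position).

    trades: signed quantities in execution order (+ buy, - sell).
    """
    position = 0
    peak = 0
    gross = 0
    for quantity in trades:
        position += quantity
        gross += abs(quantity)
        peak = max(peak, abs(position))
    return gross, peak, position


# Three round trips in one session.
trades = [50, -50, -30, 30, 80, -80]
print(exposure_profile(trades))  # (320, 80, 0)
```

A risk report built on the last number alone sees an empty book, even though 320 units changed hands and the position peaked at 80 along the way.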

It also meant we didn't need much financing, which meant very little overnight leverage: our return on balance sheet was excellent. And finally, our P&L was rarely correlated with close-to-close market moves, which meant it was rarely correlated with other market participants, even if they were trading similar models and strategies.

No requirement of model convergence; limited leverage or financing needs; minuscule margins to post; and low correlation with the rest of the market: this was the holy grail all right.

What was happening was that our proprietary, research-intensive, model-driven prop trading strategy had begun to take on the behavioural profile of a successful flow desk. This made a sort of intuitive sense: after all, we were consistently buying low and selling high and never holding trades for very long; the underlying logic may have been determined by a convergence model, but the trading pattern was that of a market-maker[17]. Indeed, we even started thinking of ourselves as ‘multi-security market-makers’, where our core models allowed us to span a wider range of hedges than any single-instrument or sector specialist.

It was, and I say this with all modesty, beautiful.

Markets Are Complex, But We Are Simplex

Yes, this was our unofficial motto. Yes, it’s terrible.

With the core infrastructure in place and proven to work, we wanted more. We added layers upon layers to our stack:

N3 was a great model, but it was just one model. We realized that if ‘fair value’ doesn’t matter and if ‘market-to-model convergence’ doesn't matter — if the only thing that matters is intraday noise — then any model that consistently identifies intraday noise should work in our system. So we added multiple models and ran them in parallel; some of these were economically-founded, others were just good old fashioned correlation hunting; all data, no math.

We also started trading more instruments: swaps, eurodollars, futures, options, cross-currency basis — anything and everything that exhibited any sort of intraday correlation or mean-reversion versus our core Treasury book, as long as it could be traded fast. (But we never ran a truly global book, even though the math made sense; we felt that there were too many ‘unknown unknowns’ and asymmetric macro risks to get comfy with.)

We became a lot more sophisticated about market impact: when to trade and how much; red flags and green flags in market structure; half-life of opportunities and residual flows; seasonal (really, diurnal) effects; how to trade around macro events and more.

Small But Mighty

Given how ambitious this project was (in technical scope, in dollars traded, and in novelty), we accomplished all of this with an astonishingly small team:

TB was the senior portfolio manager and driving force behind the project; he's now a senior exec at BlackRock.

HL was the lead programmer and architect building the system; he subsequently became CTO at a couple of other hedge funds.

RO and GG were junior analysts who did a lot of the block-and-tackle work on coding and data; they eventually started trading on the system we built, and they’re both successful portfolio managers today.

As for my role in all of this: I bridged trading and tech. I was a trader and portfolio manager who was also technical, and so I did a lot of the model / data / strategy R&D and built many of the prototypes; I was also the most consistent day-to-day trader on the system once it went live.

Five people! Small teams punch above their weight, always.

Looking back, one other thing that leaps out at me is how impossibly young we all were. TB and HL were the senior citizens on the team; I don't think either of them had reached 30. I was 24, and clad in the invincibility of youth. RO and GG were even younger, fresh out of undergrad.

All Good Things

But alpha decay is inexorable, and all good things come to an end.

I mentioned how our HFT book went from 0% to as much as 80% of our total P&L in its heyday. It remained at those high levels for a while. But then it went back to around 20%, and stayed there.

What happened? Competition. I don’t think anything we built was truly unique or irreproducible, and I’m sure many others were experimenting with similar approaches; there was something in the air[18]. I know of at least one bank whose curve-trading infra was pretty much identical to ours, and no doubt there were multiple funds playing the same game. Round-trip opportunities of 1-2bp, which we’d reliably see at least once or twice a day, became rarer and rarer, before disappearing completely. Any mispricing was quickly counter-traded.

It was a good run while it lasted. I'm sure there were firms that invested resources only to go live right when the opportunity set declined; we were fortunate (both lucky and smart) to be ahead of the curve and thus get a few years of strong performance. We were also reasonably quick to recognize when the alpha began to decay, and pivoted into a different set of strategies, rather than trying to compensate with ever larger positions.

I continued to work at Simplex for a while longer, but eventually grew bored and quit. A couple of years later, I co-founded Quandl. But that’s a story for another day!

Toronto, Dec 2024

[1] Our founding partner, like LTCM’s principals, was a graduate of the famed Salomon Brothers bond arbitrage desk; you can find him on page 44 of Liar's Poker.

[2] The firm was subsequently Buffetted as well: Berkshire Hathaway offered to buy out the partners’ equity for $250 million in exchange for an emergency cash infusion. They rejected the offer, and ended up with next to nothing.

[3] This paragraph is a dramatic over-simplification, but it captures the gist of events. Roger Lowenstein's book When Genius Failed goes into further detail. There’s also a whole library of research (both academic and practitioner) about LTCM’s demise, and its implications for arbitrage theory, efficient markets, risk management, real-world price behaviour (fat tails, correlations, risk regimes), flights to quality and liquidity, and much more.

[4] We also found a whole bunch of techniques that didn’t work, the hard (expensive) way. There’s nothing better for learning than actual P&L, and nothing worse for self-delusion.

[5] “It is a capital mistake to theorize without data”, as in, if you don’t have good data, say goodbye to your capital. (Sherlock Holmes, A Study in Scarlet.)

[6] Is this dramatic foreshadowing? I think it’s dramatic foreshadowing.

[7] My favourite such systematic bias was noting when a dealer consistently printed a high closing price for a specific bond, a clear indication that the dealer was long that bond in inventory. Thanks to our dataset, we could then trade against that information!

[8] Bloomberg did, in fact, publish constant maturity yields, but their method was flawed. We figured out how and why, and very intentionally did not report the issue to them.

[9] Pedantic aside: why was it called N3 when there were four factors? Because we decided to let ‘risk premium’ vary after seeing what happened to LTCM. This was one of the modelling decisions I’m kind of proud of; the senior quants in the firm really didn’t want to do this, for what I’d call philosophical reasons. My own philosophy here was Asimovian: “Never let your sense of morals prevent you from doing what is right”.

[10] The high-performance hardware gathered dust in a corner of our office, until we off-loaded it to a tech startup just before the dot-com crash (what a great trade). On the software side, we tried a bunch of proprietary packages, and also downloaded bleeding-edge research code from various universities. This was before robust and reliable open-source libraries; it’s not a coincidence that pandas was developed at a quant hedge fund. We ultimately ended up deploying an impenetrable piece of code we dubbed ‘The German Optimizer’, that used “a slightly modified version of the Pantoja-Mayne update for the Hessian of the Lagrangian, variable dual scaling and an improved Armijo-type stepsize algorithm”. Now you know as much about it as I do.

[11] This, by the way, is why time consistency is important: essentially, we were modelling each day’s market movements as small deltas (in N3 parameter space) from the previous day’s close; so we had to neutralize the effect of one day’s passage of time.

[12] None of which stopped people from using it. This was just before the heyday of the ‘F9 Model Monkey’: hitting F9 was the cue for Excel to refresh its calculations; the joke was that you could usually go for a cup of coffee or lunch or maybe even a short vacation while this happened.

[13] Sharing leveraged positions with other traders is like throwing chum to sharks: you’re inviting a feeding frenzy. When my (normally very reserved and in control) head of desk found out, he threw his scientific calculator at his monitor, breaking both. I still remember that because it was so out of character.

[14] Better lucky than smart.

[15] If you don’t offset, you have to pay cash for your buys, or deliver securities for your sells. Hedge funds don’t usually have the cash or securities on hand; instead, they borrow/lend either/both from a dealer. This process is called financing, and dealers have ‘repo desks’ that facilitate this for clients.

[16] Counterparties are more sophisticated these days. Think of it as evolution in action.

[17] Any sufficiently advanced form of prop trading is indistinguishable from market-making.

[18] All the pieces of the future existed in the market, even if they were unevenly distributed: yield curve models, fast networks, streaming infra, electronic execution, protocols for ‘straight-through-processing’ of trades. It just needed someone to put them together in the right envelope; for us this envelope was noise monetization + fast beats good + transformed risk profiles.
